Radar-Camera Fusion System

Overview

Radar-Camera Fusion is an early-stage research project from my master’s studies, exploring multi-sensor fusion techniques for enhanced target detection and tracking. This project implements a late fusion strategy that combines the complementary strengths of mmWave radar and camera sensors to achieve robust object localization and tracking in various scenarios.

Note: This was one of my initial research explorations during my master’s program. While I may not recall every implementation detail, the core methodology and system architecture remain documented here.

System Architecture

The system implements a late fusion strategy where radar and camera data are processed independently before being fused at the decision level. This approach leverages the strengths of both sensors:

- Camera: Provides rich visual information for object classification

- mmWave Radar: Offers accurate range, velocity, and robust performance in adverse conditions

Fusion Pipeline

- Camera branch: Camera Stream → ROI Crop → YOLO Detection → DeepSORT Tracking → Monocular Ranging → Data Fusion

- Radar branch: Range/Azimuth Extraction → Point Cloud Processing → Radar Point Cloud → Data Fusion

- Output: Data Fusion (both branches) → Fused Target Info

Core Components

1. Camera Processing Pipeline

ROI Extraction

- Image Preprocessing: Frame capture and region of interest cropping

- Adaptive ROI: Dynamic region selection based on scene context

- Resolution Optimization: Balances processing speed against detection accuracy
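When YOLO runs on a cropped (and possibly resized) ROI, its boxes come back in crop coordinates and must be mapped to full-frame pixels before tracking and ranging. The sketch below shows that bookkeeping under stated assumptions: the helper names `clamp_roi` and `roi_to_full_frame` are hypothetical, and how the adaptive ROI itself is chosen is not described in the source, so it is left out.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def clamp_roi(roi: Box, width: int, height: int) -> Tuple[int, int, int, int]:
    """Clamp a requested ROI to the image and round outward to integer pixels.

    The result can be used directly for slicing, e.g. frame[y1:y2, x1:x2].
    """
    x1, y1, x2, y2 = roi
    x1 = max(0, min(math.floor(x1), width))
    y1 = max(0, min(math.floor(y1), height))
    x2 = max(x1, min(math.ceil(x2), width))
    y2 = max(y1, min(math.ceil(y2), height))
    return x1, y1, x2, y2


def roi_to_full_frame(box: Box, roi_origin: Tuple[int, int], scale: float = 1.0) -> Box:
    """Map a detection from (resized) ROI coordinates back to full-frame pixels.

    scale is the resize factor applied to the crop before detection
    (e.g. 0.5 if a 1280-px-wide crop was shrunk to 640 px).
    """
    ox, oy = roi_origin
    x1, y1, x2, y2 = box
    return (x1 / scale + ox, y1 / scale + oy, x2 / scale + ox, y2 / scale + oy)
```

Keeping the crop offset and scale next to each detection is what lets the monocular-ranging step use the true image column and box height rather than crop-relative values.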

YOLO Object Detection

- Real-time Detection: YOLOv5 for fast and accurate object detection

- Multi-Class Recognition: Supports pedestrians, vehicles, and other objects

- Bounding Box Output: Precise object localization in image coordinates
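YOLOv5 filters its raw predictions by confidence and removes duplicate boxes with non-maximum suppression (NMS) before returning them. The pure-Python sketch below illustrates class-aware greedy NMS on `(x1, y1, x2, y2, confidence, class_id)` tuples; it is an illustration of the technique, not YOLOv5's own implementation, and the threshold defaults are illustrative values.

```python
from typing import List, Tuple

# (x1, y1, x2, y2, confidence, class_id)
Detection = Tuple[float, float, float, float, float, int]


def iou(a: Detection, b: Detection) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(dets: List[Detection], conf_thres: float = 0.25, iou_thres: float = 0.45) -> List[Detection]:
    """Greedy, class-aware NMS: keep the most confident box, drop same-class overlaps."""
    candidates = sorted((d for d in dets if d[4] >= conf_thres), key=lambda d: d[4], reverse=True)
    kept: List[Detection] = []
    for d in candidates:
        # A box survives unless an already-kept box of the same class overlaps it too much.
        if all(d[5] != k[5] or iou(d, k) < iou_thres for k in kept):
            kept.append(d)
    return kept
```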

DeepSORT Tracking

- Multi-Object Tracking: Maintains consistent IDs across frames

- Appearance Features: Deep learning-based appearance descriptor

- Kalman Filtering: Predicts each track's motion to smooth trajectories and gate detection-to-track association

- ID Management: Handles occlusions and re-identification
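DeepSORT's motion model is a constant-velocity Kalman filter over the box state. To show the predict/update cycle without a matrix library, the sketch below tracks a single coordinate with state `[position, velocity]`; DeepSORT itself uses a larger state (box center, aspect ratio, height and their velocities), and the noise values `q` and `r` here are hypothetical tuning parameters.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CVKalman1D:
    """Constant-velocity Kalman filter for one coordinate (state = [position, velocity])."""
    x: List[float]                                                   # [position, velocity]
    P: List[List[float]] = field(default_factory=lambda: [[10.0, 0.0], [0.0, 10.0]])
    q: float = 1.0   # process-noise intensity (hypothetical tuning value)
    r: float = 4.0   # measurement-noise variance (hypothetical tuning value)

    def predict(self, dt: float) -> None:
        # State transition x' = F x with F = [[1, dt], [0, 1]].
        p, v = self.x
        self.x = [p + dt * v, v]
        (a, b), (c, d) = self.P
        # Covariance P' = F P F^T + Q, with Q from a discrete white-noise acceleration model.
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q * dt**4 / 4, b + dt * d + self.q * dt**3 / 2],
            [c + dt * d + self.q * dt**3 / 2, d + self.q * dt**2],
        ]

    def update(self, z: float) -> None:
        # Position-only measurement, H = [1, 0].
        (a, b), (c, d) = self.P
        s = a + self.r              # innovation variance H P H^T + R
        k0, k1 = a / s, c / s       # Kalman gain K = P H^T / s
        y = z - self.x[0]           # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # Covariance P = (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]
```

In a tracker, `predict` runs once per frame for every track, and `update` runs only for tracks that were matched to a detection; unmatched tracks coast on their predictions, which is what carries IDs through short occlusions.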

Monocular Distance Estimation

- Pinhole Camera Model: Geometry-based distance calculation

- Calibration: Camera intrinsic parameter calibration

- Height-Based Ranging: Estimates distance from the object's assumed real-world height, its bounding-box height in pixels, and the focal length

- Azimuth Calculation: Computes horizontal angle from image coordinates
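Under the pinhole model, an upright object of real height H at depth Z spans roughly h = f_y · H / Z pixels, so Z = f_y · H / h, and an image column u maps to a horizontal angle θ = atan((u − c_x) / f_x). The sketch below assumes a level camera, square pixels, and a known object height (e.g. an assumed pedestrian height); `focal_from_fov` is a hypothetical fallback for when calibrated intrinsics are not at hand.

```python
import math


def focal_from_fov(image_width_px: int, hfov_deg: float) -> float:
    """Approximate focal length in pixels from the horizontal field of view (ignores distortion)."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def estimate_depth(bbox_height_px: float, object_height_m: float, fy_px: float) -> float:
    """Pinhole model: h_px = fy * H / Z, so Z = fy * H / h_px (depth along the optical axis)."""
    return fy_px * object_height_m / bbox_height_px


def estimate_azimuth_deg(u_px: float, cx_px: float, fx_px: float) -> float:
    """Horizontal angle of the ray through column u; positive to the right of the optical axis."""
    return math.degrees(math.atan2(u_px - cx_px, fx_px))
```

For the 1920-px, 60° camera listed in the specifications, `focal_from_fov(1920, 60)` gives about 1663 px. Note that the height formula yields depth along the optical axis; to compare it with radar range, the slant range is approximately Z / cos θ.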

2. Radar Processing Pipeline

Point Cloud Generation

- mmWave Radar: Processes raw radar data to extract point clouds

- Range-Azimuth-Elevation: 3D spatial information for each detection

- Velocity Information: Doppler-based velocity measurement

- SNR Filtering: Signal quality-based point filtering
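Each radar detection arrives as range, azimuth, elevation, Doppler and SNR. A minimal sketch of the two steps above, SNR gating and conversion to Cartesian coordinates, follows; the `RadarPoint` fields, the axis convention (x right, y along boresight, z up) and the 10 dB threshold are assumptions, since the actual radar output format is not given.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RadarPoint:
    range_m: float
    azimuth_deg: float     # positive to the right of boresight (assumed convention)
    elevation_deg: float   # positive upward (assumed convention)
    doppler_mps: float     # radial velocity; sign convention depends on the radar firmware
    snr_db: float


def filter_by_snr(points: List[RadarPoint], min_snr_db: float = 10.0) -> List[RadarPoint]:
    """Drop low-quality detections before clustering."""
    return [p for p in points if p.snr_db >= min_snr_db]


def to_cartesian(p: RadarPoint) -> Tuple[float, float, float]:
    """Spherical (range, azimuth, elevation) to radar-frame Cartesian (x, y, z)."""
    az, el = math.radians(p.azimuth_deg), math.radians(p.elevation_deg)
    x = p.range_m * math.cos(el) * math.sin(az)   # lateral, positive right
    y = p.range_m * math.cos(el) * math.cos(az)   # forward, along boresight
    z = p.range_m * math.sin(el)                  # up
    return x, y, z
```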

Target Extraction

- Clustering: Groups radar points into distinct targets

- Centroid Calculation: Computes target center position

- Velocity Estimation: Extracts radial velocity for each target
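The source does not name the clustering algorithm. DBSCAN is a common choice for sparse radar point clouds because it needs no preset number of targets and labels isolated returns as noise, so the sketch below uses a minimal O(n²) DBSCAN on (x, y) as an assumed stand-in, then computes each cluster's centroid and mean radial velocity. The `eps` and `min_pts` values are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

# (x, y, radial_velocity) after SNR filtering and conversion to Cartesian coordinates
Point = Tuple[float, float, float]


def dbscan(points: List[Point], eps: float = 0.8, min_pts: int = 3) -> List[int]:
    """Minimal DBSCAN on (x, y); returns a cluster label per point, -1 for noise."""
    n = len(points)
    neighbors = [
        [j for j in range(n) if math.dist(points[i][:2], points[j][:2]) <= eps]
        for i in range(n)
    ]
    labels: List[Optional[int]] = [None] * n  # None = not yet visited
    cluster = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = -1  # noise for now; may later be claimed as a border point
            continue
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_pts:  # core point: keep expanding
                queue.extend(neighbors[j])
        cluster += 1
    return labels


def extract_targets(points: List[Point], labels: List[int]) -> Dict[int, Tuple[float, ...]]:
    """Per-cluster mean of (x, y, radial_velocity), i.e. centroid plus mean velocity."""
    groups: Dict[int, List[Point]] = {}
    for p, lab in zip(points, labels):
        if lab >= 0:
            groups.setdefault(lab, []).append(p)
    return {lab: tuple(sum(c) / len(pts) for c in zip(*pts)) for lab, pts in groups.items()}
```

Clustering on position alone can merge two people walking side by side; adding scaled Doppler as a third clustering dimension is a common refinement.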

3. Late Fusion Strategy

Spatial Alignment

- Coordinate Transformation: Converts camera and radar coordinates to a common reference frame

- Calibration Matrix: Extrinsic calibration between camera and radar

- Temporal Synchronization: Aligns timestamps between sensors
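Spatial alignment amounts to applying the extrinsic calibration p_cam = R · p_radar + t and, if radar points are to be drawn on or compared in the image, projecting them with the camera intrinsics. Temporal alignment can be as simple as pairing each radar frame with the nearest camera frame. The sketch below assumes the OpenCV camera frame (x right, y down, z forward); the example rotation for co-located, boresight-aligned sensors and the 50 ms gap limit are hypothetical.

```python
import bisect
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]

# Hypothetical extrinsics for co-located sensors with aligned boresights:
# radar (x right, y forward, z up) -> camera (x right, y down, z forward).
R_RADAR_TO_CAM: Mat3 = ((1, 0, 0), (0, 0, -1), (0, 1, 0))


def radar_to_camera(p_radar: Vec3, R: Mat3, t: Vec3) -> Vec3:
    """Apply the extrinsic calibration p_cam = R @ p_radar + t."""
    return tuple(sum(R[i][k] * p_radar[k] for k in range(3)) + t[i] for i in range(3))


def project_to_image(p_cam: Vec3, fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection to pixel coordinates; None if the point is behind the camera."""
    x, y, z = p_cam
    if z <= 0:
        return None
    return fx * x / z + cx, fy * y / z + cy


def nearest_timestamp(stamps: List[float], t: float, max_gap: float = 0.05) -> Optional[int]:
    """Index of the sorted timestamp closest to t, or None if the gap exceeds max_gap seconds."""
    i = bisect.bisect_left(stamps, t)
    best = min((j for j in (i - 1, i) if 0 <= j < len(stamps)),
               key=lambda j: abs(stamps[j] - t), default=None)
    if best is None or abs(stamps[best] - t) > max_gap:
        return None
    return best
```

With the camera at 30 FPS and the radar at 10 to 20 Hz, matching each radar frame to its nearest camera frame keeps the residual offset under about 17 ms when the camera stream has no dropped frames.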

Data Association

- Position Matching: Associates camera detections with radar points based on spatial proximity

- Gating Threshold: Defines acceptable matching distance

- Confidence Weighting: Combines detection confidences from both sensors
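Once both sensors report targets as (range, azimuth) in the common frame, association can be done by computing pairwise distances, discarding pairs outside the gate, and committing the closest pairs first. The sketch below uses greedy global nearest-neighbour matching as an assumed, simple choice; an optimal assignment (for example with `scipy.optimize.linear_sum_assignment` on the same gated cost matrix) is a common alternative. The 2 m gate is hypothetical.

```python
import math
from typing import Dict, List, Set, Tuple

# (range_m, azimuth_deg) in the common reference frame
Polar = Tuple[float, float]


def polar_to_xy(r: float, az_deg: float) -> Tuple[float, float]:
    """Ground-plane coordinates: x lateral (right), y forward."""
    a = math.radians(az_deg)
    return r * math.sin(a), r * math.cos(a)


def associate(camera: List[Polar], radar: List[Polar], gate_m: float = 2.0) -> Dict[int, int]:
    """Greedy gated nearest-neighbour matching; returns {camera_index: radar_index}."""
    pairs = []
    for i, (rc, ac) in enumerate(camera):
        for j, (rr, ar) in enumerate(radar):
            d = math.dist(polar_to_xy(rc, ac), polar_to_xy(rr, ar))
            if d <= gate_m:  # gating: implausible pairs are never considered
                pairs.append((d, i, j))
    matches: Dict[int, int] = {}
    used_radar: Set[int] = set()
    for _, i, j in sorted(pairs):  # closest pairs are committed first
        if i not in matches and j not in used_radar:
            matches[i] = j
            used_radar.add(j)
    return matches
```

Because monocular range error grows with distance, a fixed gate is a simplification; scaling the gate with range is a natural extension.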

Fused Output

- Enhanced Localization: Improved position accuracy by combining both sensors

- Velocity Information: Radar-provided velocity enriches camera detections

- Robust Detection: Maintains tracking even when one sensor fails
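One standard way to combine a matched camera/radar pair is inverse-variance weighting, which is the minimum-variance linear combination for two independent, unbiased estimates. The sketch below assumes that scheme (the source does not specify the fusion rule) and falls back to whichever sensor is available when the other has no measurement. The variances in the usage note are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Estimate:
    value: float
    variance: float


def inverse_variance_fuse(a: Optional[Estimate], b: Optional[Estimate]) -> Optional[Estimate]:
    """Fuse two independent estimates; if one is missing, return the other unchanged."""
    if a is None or b is None:
        return a if b is None else b
    wa, wb = 1.0 / a.variance, 1.0 / b.variance
    return Estimate((wa * a.value + wb * b.value) / (wa + wb), 1.0 / (wa + wb))
```

Applied per component, this naturally takes range mostly from the radar (small range variance) and azimuth mostly from the camera (the radar's 15° angular resolution implies a large azimuth variance), while radial velocity is copied from the radar and the class label and track ID from the camera. For example, fusing a camera range of 10.0 m (variance 1.0 m²) with a radar range of 10.4 m (variance 0.04 m²) gives about 10.38 m. Inflating the camera variances at night or in rain is one way to realize the adjustable fusion weights mentioned under the advantages of late fusion.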

Technical Highlights

Advantages of Late Fusion

- ✅ Modularity: Independent processing allows easy sensor replacement or upgrade

- ✅ Robustness: System continues functioning if one sensor fails

- ✅ Flexibility: Can adjust fusion weights based on environmental conditions

- ✅ Interpretability: Clear understanding of each sensor’s contribution

Challenges Addressed

- Calibration Complexity: Precise spatial and temporal alignment between sensors

- Coordinate Transformation: Accurate mapping between different sensor coordinate systems

- Association Ambiguity: Resolving which radar points correspond to which camera detections

- Computational Efficiency: Real-time processing of dual sensor streams

Applications

The radar-camera fusion system is particularly well-suited for scenarios requiring both visual recognition and precise ranging:

Autonomous Driving

- Pedestrian Detection: Enhanced detection accuracy in various lighting conditions

- Vehicle Tracking: Robust tracking with velocity information

- Obstacle Avoidance: Reliable distance measurement for path planning

Intelligent Surveillance

- Perimeter Security: Combined visual identification and range verification

- Intrusion Detection: Multi-modal confirmation reduces false alarms

- Crowd Monitoring: Track multiple targets with unique identities

Smart Transportation

- Traffic Flow Analysis: Vehicle counting and speed measurement

- Parking Management: Occupancy detection with vehicle classification

- Intersection Monitoring: Multi-target tracking in complex intersection scenes

Robotics

- Navigation: Obstacle detection and avoidance

- Human-Robot Interaction: Safe distance maintenance and gesture recognition

- Object Manipulation: Precise localization for grasping tasks

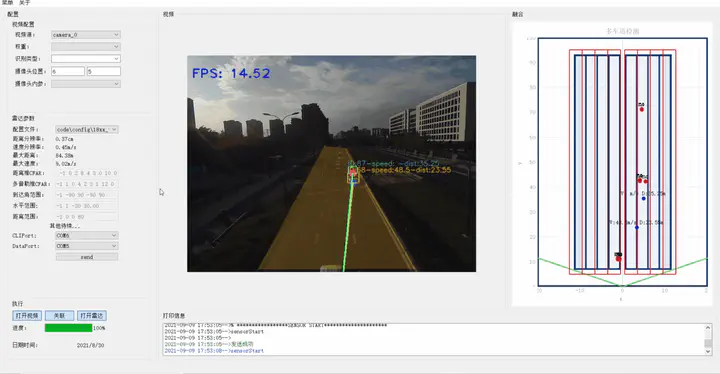

System Demonstration

The demonstration shows the system’s ability to:

- Detect and classify objects using camera-based YOLO

- Track multiple targets with DeepSORT

- Fuse camera-derived positions with radar point clouds

- Provide accurate range and velocity information

Technical Specifications

Camera Module

- Resolution: 1920×1080 (Full HD)

- Frame Rate: 30 FPS

- Field of View: 60° horizontal

- Detection Network: YOLOv5

- Tracking Algorithm: DeepSORT

Radar Module

- Frequency: 77 GHz mmWave

- Range Resolution: 0.1-0.2 m

- Velocity Range: ±50 m/s

- Angular Resolution: 15° (azimuth)

- Update Rate: 10-20 Hz
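Assuming the 77 GHz radar uses FMCW chirps (typical for this band, though the source does not say so), range resolution depends only on the sweep bandwidth B: ΔR = c / (2B). The small helper below makes the listed 0.1-0.2 m figure concrete: it corresponds to roughly 1.5 GHz down to 0.75 GHz of bandwidth.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """FMCW range resolution: delta_R = c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)


def bandwidth_for_resolution_hz(resolution_m: float) -> float:
    """Sweep bandwidth needed for a target resolution: B = c / (2 * delta_R)."""
    return C / (2.0 * resolution_m)
```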

Fusion Performance

- Latency: < 100 ms (end-to-end)

- Position Accuracy: ±0.3 m (fused)

- Tracking Range: 0.5-50 m

- Max Targets: 10+ simultaneous objects

Lessons Learned

This early research project provided valuable insights into multi-sensor fusion:

- ✅ Complementary Strengths: Camera excels at classification, radar at ranging

- ✅ Calibration is Critical: Accurate sensor alignment is essential for fusion

- ✅ Late Fusion Trade-offs: Simpler implementation but may miss low-level correlations

- ✅ Real-time Challenges: Synchronization and computational efficiency are key

Future Directions

While this project explored late fusion, modern approaches might consider:

- Early Fusion: Fusing raw sensor data for richer feature extraction

- Deep Learning Fusion: End-to-end neural networks for joint processing

- Adaptive Fusion: Dynamic weighting based on environmental conditions

- Additional Sensors: Incorporating LiDAR or thermal cameras